Washington’s new high‑risk AI bill, HB 2157, has dropped, and it reads like a direct translation of the Attorney General’s AI Task Force Interim Report into binding state law. Everything we warned about in the Task Force process is now showing up in legislative form: expanded government power, ideological “bias testing,” and sweeping new mandates on employers, healthcare providers, and private innovators.

This is not a neutral “AI safety” bill. It is the regulatory framework the AG’s office has been building toward for the last two years, and it will affect every business that uses AI in hiring, healthcare, housing, finance, or public‑facing services.

Below is what you need to know.

HB 2157: The Task Force Report, Now with Legal Teeth

The bill mirrors the Task Force’s eight major recommendations almost point‑for‑point. Here’s how:

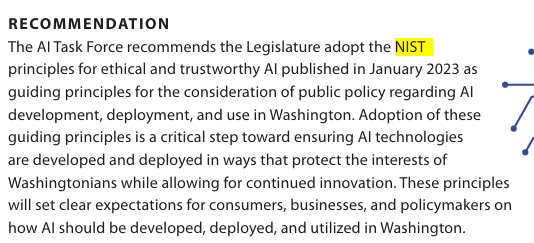

1. NIST Ethical AI Principles → HB 2157’s Mandatory Governance Frameworks

Task Force Recommendation: Adopt NIST’s Ethical AI Principles and Risk Management Framework.

HB 2157 Implementation: The bill requires developers and deployers of “high‑risk AI systems” to adopt risk‑based governance frameworks, conduct ongoing monitoring, and document mitigation strategies.

What was voluntary guidance in the Task Force report becomes mandatory compliance in HB 2157.

2. Transparency in AI Development → Developer Disclosure Mandates

Task Force Recommendation: Require public disclosure of training data provenance, quality, and diversity.

HB 2157 Implementation: Developers must disclose:

- Intended uses

- Known limitations

- Risks of discrimination

- Evaluation and testing summaries

- Mitigation strategies

- Documentation for deployers

This is the Task Force’s transparency agenda, now enforceable by law.

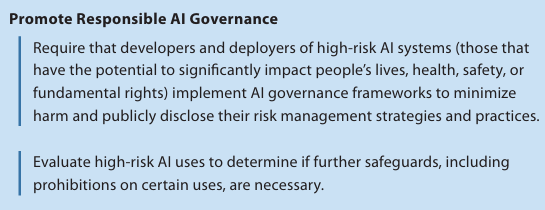

3. Responsible AI Governance → High‑Risk AI Regulation

Task Force Recommendation: Regulate “high‑risk AI systems” and consider banning certain uses.

HB 2157 Implementation: The bill defines high‑risk AI broadly, covering employment, housing, healthcare, education, lending, and public services, and imposes:

- Risk management programs

- Impact assessments

- Ongoing monitoring

- Consumer disclosures

- Adverse‑action explanations

This is the core of the Task Force’s regulatory vision.

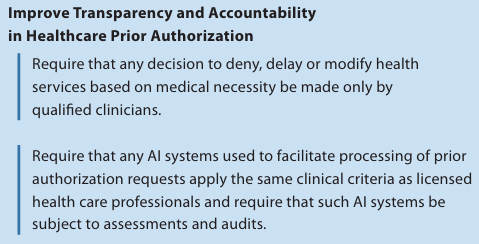

4. Healthcare Prior Authorization → High‑Risk Healthcare AI

Task Force Recommendation: Require clinicians to make final decisions, not AI systems.

HB 2157 Implementation: Healthcare AI is explicitly treated as high‑risk, triggering:

- Mandatory impact assessments

- Documentation requirements

- Human‑in‑the‑loop decision‑making

The Task Force’s healthcare section is now codified.

5. Workplace AI Guidelines → Employment AI Regulation

Task Force Recommendation: Create workplace AI principles and oversight.

HB 2157 Implementation: Any AI used in hiring, screening, evaluation, or promotion is automatically classified as high‑risk, meaning:

- Employers must conduct impact assessments

- Applicants must receive disclosures

- Adverse decisions must be explained

- Employers face liability for “algorithmic discrimination”

This is a sweeping new regulatory burden on Washington employers — including small businesses.

The “Bias Testing” Agenda Becomes Enforceable Law

The Task Force’s most ideological component, bias testing, is now embedded in HB 2157’s core enforcement standard.

The bill requires developers and deployers to use “reasonable care” to prevent algorithmic discrimination, defined across:

- Race & ethnicity

- Gender

- Socioeconomic status

- Geography

- Healthcare outcomes

- And other protected classes

This is the same political definition of “bias” the Task Force used — not a neutral scientific standard.

And because the bill creates a private right of action, individuals can sue businesses directly for alleged algorithmic discrimination.

This is not guidance. It is a litigation framework.

Who Is Affected? (Hint: Not Just Big Tech)

HB 2157 applies to any employer or business using AI to make “consequential decisions,” including:

- Hiring

- Housing

- Healthcare

- Lending

- Education

- Insurance

- Public services

There is no size threshold for deployers. A five‑person business using an AI résumé screener is regulated the same as Microsoft.

This is exactly the kind of broad, government‑first approach the Task Force was designed to justify.

What We’ll Be Watching

As HB 2157 moves through the Legislature, we will be tracking:

- How “algorithmic discrimination” is defined

- Whether NIST frameworks become mandatory

- The scope of required impact assessments

- The burden on small businesses and employers

- The potential for litigation abuse

- Whether additional oversight offices are created

- How this compares to federal efforts like Trump’s AI Executive Orders and Hawley’s GUARD Act

Washington is choosing the most aggressive, equity‑driven AI regulatory path in the country — and HB 2157 is the first major step.

CLW Will Continue to Lead

We will continue exposing the ideological bias behind the Task Force and opposing legislation that threatens innovation, parental rights, and economic freedom.

Your support allows us to stay in this fight.